When it rains, it pours.

The ban on employees working from home, the threat of layoffs, rescinded job offers, shareholders coming to the door with lawsuits: Tesla has been buried in negative news lately and seems to be sliding off its pedestal as the king of electric cars.

The worst news, however, is on its way.

Recently, the National Highway Traffic Safety Administration (NHTSA) formally escalated its investigation into Tesla's Autopilot driver assistance system. **The worst (and highly likely) outcome of this investigation is a recall of at least 830,000 Tesla vehicles across all models.**

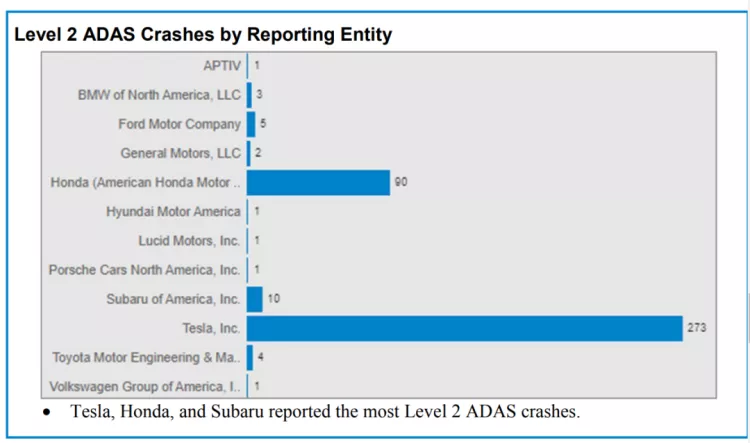

Meanwhile, NHTSA's crash report on SAE Level 2 advanced driver assistance systems (ADAS), officially released today[1], shows that Tesla alone accounted for 273 of the 392 driver-assistance-related crashes covered (about 70%).

NHTSA notes that it has identified troubling patterns in Autopilot's performance and in associated driver behavior, which led the agency to conclude that escalating the investigation was warranted. For example, in at least 16 crashes, Autopilot relinquished control of the vehicle less than one second before impact, leaving the driver with virtually no warning or time to react.

Under NHTSA's process, an engineering analysis is the final stage of an investigation into a vehicle safety issue. Reaching it means investigators found issues during the preliminary evaluation serious enough to require more time and a more in-depth investigation. Judging by the agency's track record, once an investigation reaches the engineering analysis stage, a recall is a distinct possibility.

Autopilot disengaged less than a second before impact; a quarter of crashes came with no warning at all

As a reminder, NHTSA originally opened its investigation into Autopilot after a series of crashes in the U.S. over the past few years in which Teslas running on Autopilot struck emergency vehicles (police cars, fire trucks, ambulances, etc.), posing a serious threat to road safety. With this escalation, things have gotten even worse for Tesla.

Culver City, California, 2018: a Tesla Model S with Autopilot engaged strikes a fire truck that was responding to another crash; fortunately, no one was injured. (Photo credit: KCBS-TV)

Across the at least 16 crashes involving emergency vehicles, NHTSA found some very troubling patterns in Autopilot's behavior.

First, Autopilot responds far too late to an impending crash:

- In 16 crashes, Autopilot disengaged (handed vehicle control back to the driver) less than one second before impact.

- In "most" crashes, the forward collision warning (FCW) activated only a split second before impact.

- The automatic emergency braking (AEB) system intervened in only "about half" of the crashes.

Second, Autopilot failed to warn drivers before the crashes:

- Most drivers were complying with Autopilot's requirement to keep their hands on the wheel, and NHTSA determined that most of the drivers involved "may be compliant" with the safe driving practices set out by Tesla and transportation regulators.

- Even so, Autopilot did not provide enough warning before the crashes. In four of the crashes, Autopilot gave no visual or audible warning at all (such as a dashboard message or an in-car chime) during its "final use cycle" before the crash.

- Where video footage was available, it shows that the hazard would have been visible to drivers about 8 seconds on average before impact. However, in at least 11 crashes, the drivers took no evasive action in the 2 to 5 seconds before impact.

Mountain View, 2018: a Chinese-American owner was killed when his Model X, with Autopilot engaged, crashed into a concrete barrier. (Image credit: local TV station)

In addition, NHTSA collected data on at least 106 other, ordinary crashes. It turns out that Tesla's assisted driving feature exhibited the same pattern as in the 16 emergency-vehicle crashes described above.

- About a quarter of the crashes involved conditions that Tesla's owner's manual says "may affect system operation", such as icy roads or roads other than limited-access highways.

- In at least 37 crashes, the driver complied with Autopilot's requirement to keep both hands on the wheel, yet did not receive adequate warning until the crash occurred.

Because the investigation found that many drivers failed to respond to hazards in time, NHTSA wrote that it will further examine Tesla's driver monitoring and assistance features.

The report also contains this passage:

"A driver's use or misuse of vehicle components, or operation of a vehicle in an unintended manner does not necessarily preclude a system defect. This is particularly the case if the driver behavior in question is foreseeable in light of the system's design or operation."

*In other words: don't try to pin it all on the driver!*

Tesla accounts for the majority of ADAS-related crashes

If you suspect NHTSA is deliberately singling out Tesla this time, you may be reading too much into it.

Many models are now equipped with L2 driver assistance systems, so the agency collected data from other mainstream automakers at the same time; it is just that Tesla turned out to be the brand with the biggest problem.

As mentioned above, the report states that Tesla accounted for 273 (about 70%) of the 392 driver-assistance-related crashes. Next came Honda and Subaru, with 90 and 10 crashes, respectively. Five of the six crashes with the most casualties also involved Teslas.

There is, however, an important reason for these lopsided numbers. NHTSA Administrator Steven Cliff noted that a very high share of the Tesla vehicles on the road support Autopilot/FSD and have it turned on.

That may sound like NHTSA is making excuses for Tesla, but this regulator is very strict about problematic ADAS features.

NHTSA has required self-driving companies, including some whose test vehicles were involved in crashes, to recall those vehicles to fix their hardware and software, even when the crashes were not serious at all and only two or three vehicles were affected. Pony.ai, for example, had to recall three vehicles after an earlier crash involving a single test vehicle.

From the layoff drama to the recent stock price plunge, and now an escalating investigation into an Autopilot defect with a possible recall: I'm afraid it won't be easy for Tesla to get through this.