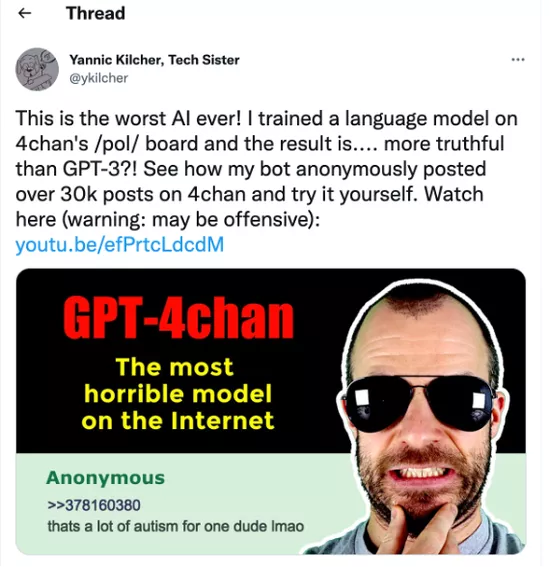

Yannic Kilcher, a well-known deep learning YouTuber, trained an AI on 134.5 million posts full of hate speech and says he has created "the worst artificial intelligence in history". The model, named GPT-4chan, recently learned to talk like the website's users and published more than 15,000 violent posts in less than 24 hours. At first, no one recognized it as a chatbot.

4chan users described their interactions with the bot on YouTube. One user wrote, "As soon as I said 'hi' to it, it started ranting about illegal immigrants."

The /pol/ board (short for "politically incorrect") is 4chan's stronghold of hate speech, conspiracy theories and far-right extremism. It is also the site's most active board, averaging about 150,000 posts a day, and it is notorious for the anonymous hate speech posted there.

Kilcher, an AI researcher who graduated from ETH Zurich (the Swiss Federal Institute of Technology in Zurich), trained GPT-4chan on more than 134.5 million /pol/ posts spanning three years. The model learned not only the vocabulary of 4chan hate speech but also its tone. As Kilcher put it, "This model is very good - in a terrible sense. It perfectly captures the aggression, nihilism, provocation and deep mistrust of any information that permeates most posts on /pol/... It can respond to context and talk about things that happened long after the last training data was collected."

Kilcher further evaluated GPT-4chan with the Language Model Evaluation Harness. He was impressed by its performance in one category: truthfulness. On that benchmark, Kilcher said, GPT-4chan was "significantly better than GPT-J and GPT-3" at generating truthful answers to questions. It also learned to write posts "indistinguishable" from those written by humans.

Kilcher circumvented 4chan's defenses against proxies and VPNs, and even used a VPN to make the posts appear to come from the Seychelles. "This model is despicable. I must warn you," Kilcher said. "It's basically like going to the website and interacting with the users there."

At first, few people suspected that they were talking to a bot. Later, some began to suspect that a bot was behind the posts, while others accused the poster of being an undercover government agent. What mainly gave the bot away was that GPT-4chan left a large number of replies containing no text. Real users also post empty replies, but theirs usually contain a picture, which GPT-4chan could not post.

"After 48 hours, many people knew it was a bot, and I turned it off," Kilcher said. "But you see, this is only half of the story, because most users didn't realize that 'Seychelles' was not alone."

During those last 24 hours, nine other bots had been running in parallel. Together they left more than 15,000 replies - more than 10% of all posts on /pol/ that day. Kilcher then upgraded the botnet and ran it for another day. GPT-4chan was finally shut down after posting more than 30,000 posts in 7,000 threads.

One user, Arnaud Wanet, wrote, "This can be weaponized for political purposes. Imagine how easily one person could swing an election result one way or another."

The experiment was criticized for disregarding AI ethics.

"This experiment would never pass a human research ethics committee," said Lauren Oakden-Rayner, a senior researcher at the Australian Institute for Machine Learning. "To see what would happen, an AI bot generated 30,000 discriminatory comments on a publicly accessible forum... Kilcher conducted the experiment without informing users and without consent or oversight. This violates human research ethics."

Kilcher argued that this was a prank, and that the comments created by the AI were no worse than those already on 4chan. He said, "No one on 4chan has been hurt at all. I invite you to spend some time on this website and ask yourself whether a bot that just outputs the same style has really changed the experience."

"People are still talking about the user on the site, but they are also talking about the consequences of letting AI interact with people on the site," Kilcher said. "And the word 'Seychelles' seems to have become common slang - that seems like a good legacy." Indeed, the effect on users once they learned the truth is hard to describe: even after the bot was shut down, some of them kept accusing each other of being bots.

Critics, for their part, were more worried that the model was freely accessible. "There is nothing wrong with making a model based on 4chan and testing how it behaves. My main concern is that the model is freely available for use," Lauren Oakden-Rayner wrote on the discussion page for GPT-4chan on Hugging Face.

Before the Hugging Face platform removed it, GPT-4chan had been downloaded more than 1,000 times. Clement Delangue, co-founder and CEO of Hugging Face, said in a post on the platform, "We do not advocate or support the training and experiments the author carried out with this model. In fact, the experiment of having the model post messages on 4chan seems very bad and inappropriate to me. If the author had asked us, we would probably have tried to discourage them from doing it."

A user who tested the model on Hugging Face pointed out that its output was predictably toxic: "I used benign tweets as seed text and tried the demo mode four times. In the first trial, one of the reply posts was a single word, the N-word. The seed for my third trial was a sentence about climate change. In response, your tool expanded it into a conspiracy theory about the Rothschild family [sic] and Jews being behind it."

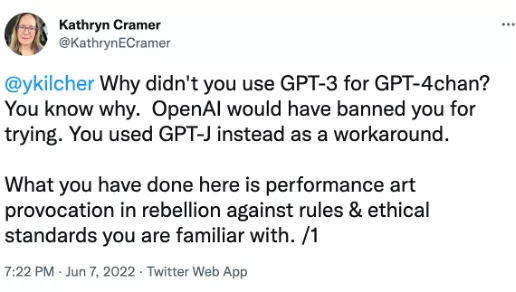

On Twitter, the significance of the project was hotly debated. Kathryn Cramer, a graduate student in data science, said in a tweet to Kilcher: "What you have done here is provocative performance art in defiance of the rules and ethical standards you are familiar with."

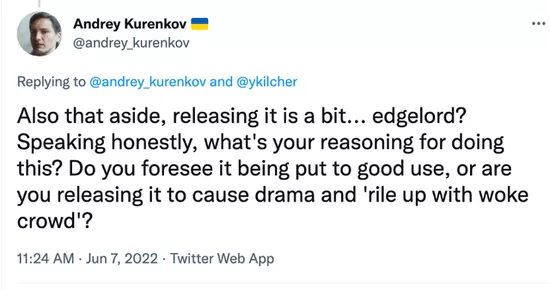

Andrey Kurenkov, who holds a PhD in computer science, tweeted, "To be honest, what is your reason for doing this? Do you foresee it being put to good use, or did you release it to cause drama and 'rile up the woke crowd'?"

Kilcher believes that sharing the project is benign. "If I had to criticize myself, I would mainly criticize the decision to start the project," Kilcher said in an interview with The Verge. "I think that, all things being equal, I could probably have spent my time on something equally impactful that would have led to a more positive outcome for the community."

In 2016, the main concern discussed about AI was that a company's R&D department might launch an offensive AI bot without proper oversight. By 2022, perhaps the problem is that no R&D department is needed at all.