In data center and artificial intelligence (AI) computing, NVIDIA holds a clear GPU lead, while AMD is steadily catching up. Microsoft recently announced that it is the first to purchase AMD's MI200 series accelerators for large-scale AI training in the cloud. At the recent Build 2022 conference, Microsoft CTO Kevin Scott announced that Azure will become the first public cloud service to deploy AMD's flagship MI200 series GPUs for large-scale AI acceleration.

The MI200 series accelerators were released last November. AMD claimed up to five times the performance of NVIDIA's A100 accelerator, particularly in FP64 computing.

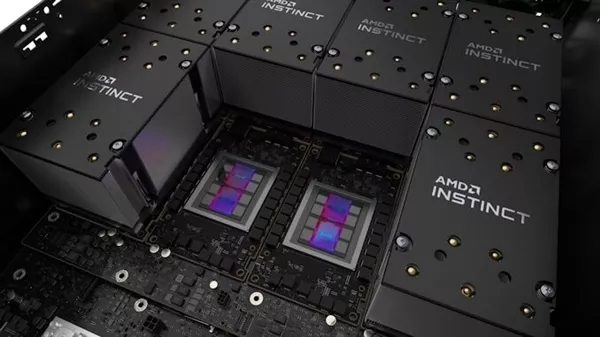

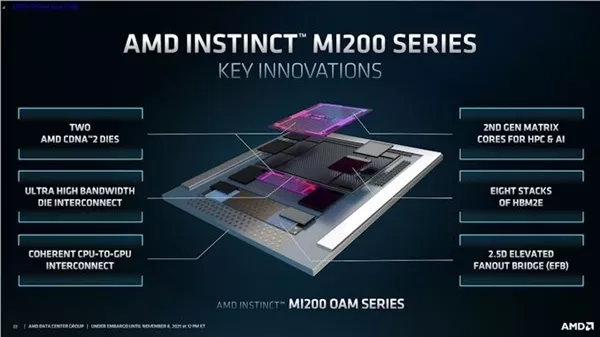

The MI200 series moves to the new CDNA 2 compute architecture, built on an upgraded 6nm FinFET process with 58 billion transistors, and uses 2.5D Elevated Fanout Bridge (EFB) technology. AMD describes it as the industry's first multi-die (MCM) GPU, with two dies integrated in a single package.

The series comes in two models. The Instinct MI250X integrates 220 compute units with 14,080 stream processors, running at up to 1.7 GHz, plus 880 second-generation Matrix Cores. Its peak performance is 47.9 TFLOPS for FP32/FP64 vector operations, 95.7 TFLOPS for FP32/FP64 matrix operations, and 383 TFLOPS for FP16 and BF16 (383 TOPS for INT8 and INT4).
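The quoted peak figures can be reproduced from the unit counts with simple arithmetic. The sketch below assumes that each stream processor completes one fused multiply-add (counted as 2 FLOPs) per clock in FP32/FP64 vector mode; the 2x matrix and 8x FP16 multipliers are not from AMD documentation but are read off the ratios between the figures quoted above.

```python
# Sketch: reproduce the MI250X peak figures from its unit counts and clock.
# Assumptions: one FMA (2 FLOPs) per stream processor per clock for
# FP32/FP64 vector math; the matrix (2x) and FP16/BF16 (8x) multipliers are
# inferred from the ratios between the quoted peak figures.

COMPUTE_UNITS = 220
STREAM_PROCESSORS = 14_080
BOOST_CLOCK_HZ = 1.7e9
FLOPS_PER_FMA = 2  # one multiply plus one add


def peak_tflops(lanes: int, clock_hz: float, flops_per_lane_per_clock: float) -> float:
    """Peak throughput in TFLOPS: lanes x clock x FLOPs per lane per clock."""
    return lanes * clock_hz * flops_per_lane_per_clock / 1e12


lanes_per_cu = STREAM_PROCESSORS // COMPUTE_UNITS  # stream processors per CU
vector = peak_tflops(STREAM_PROCESSORS, BOOST_CLOCK_HZ, FLOPS_PER_FMA)
matrix = 2 * vector  # inferred: matrix rate is twice the vector rate
fp16 = 8 * vector    # inferred: FP16/BF16 rate is eight times the vector rate

print(f"Stream processors per CU: {lanes_per_cu}")
print(f"FP32/FP64 vector: {vector:.1f} TFLOPS")
print(f"FP32/FP64 matrix: {matrix:.1f} TFLOPS")
print(f"FP16/BF16: {fp16:.1f} TFLOPS")
```

Running it yields 64 stream processors per CU and peak values that round to the 47.9, 95.7 and 383 TFLOPS figures listed above.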

It is paired with 128 GB of HBM2e memory on an 8192-bit bus at 1.6 GHz, for a peak bandwidth of 3276.8 GB/s, and supports full-chip ECC.
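The 3276.8 GB/s figure follows from the bus width and clock. This sketch assumes that HBM2e transfers data on both clock edges (double data rate), so a 1.6 GHz clock corresponds to 3.2 Gb/s per pin; the helper name is illustrative only.

```python
# Sketch: peak HBM2e bandwidth from bus width and memory clock.
# Assumption: double data rate, i.e. two transfers per clock cycle,
# so 1.6 GHz gives 3.2 Gb/s per pin.

BUS_WIDTH_BITS = 8192
MEMORY_CLOCK_HZ = 1.6e9
TRANSFERS_PER_CLOCK = 2  # double data rate


def peak_bandwidth_gb_per_s(bus_width_bits: int, clock_hz: float, transfers_per_clock: int) -> float:
    """Peak bandwidth in decimal gigabytes per second."""
    bytes_per_transfer = bus_width_bits / 8  # 8192 bits -> 1024 bytes
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


bandwidth = peak_bandwidth_gb_per_s(BUS_WIDTH_BITS, MEMORY_CLOCK_HZ, TRANSFERS_PER_CLOCK)
print(f"Peak bandwidth: {bandwidth:.1f} GB/s")
```

That is 1024 bytes × 1.6 GHz × 2 = 3276.8 GB/s, or roughly 3.2 TB/s.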

The card uses the OAM module form factor (a PCIe add-in card version is planned), supports PCIe 4.0 x16, and is passively cooled, relying on system airflow. Typical power consumption is 500 W, with a peak of 560 W.

The Instinct MI250 is cut down to 208 compute units and 13,312 stream processors. All peak performance figures drop by about 5.5%, while the other specifications remain unchanged.
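The roughly 5.5% reduction is simply the ratio of compute units, 208/220. The sketch below assumes the MI250 keeps the same clock and per-CU throughput as the MI250X, so its peak figures scale linearly with CU count; the resulting MI250 values are estimates derived this way, not figures quoted in the article.

```python
# Sketch: estimate MI250 peak figures by scaling the MI250X figures by CU count.
# Assumption: same clock and per-CU throughput, so peak throughput scales
# linearly with the number of compute units.

MI250X_CUS = 220
MI250_CUS = 208
LANES_PER_CU = 64  # 14,080 stream processors / 220 CUs on the MI250X

MI250X_PEAK_TFLOPS = {
    "FP32/FP64 vector": 47.9,
    "FP32/FP64 matrix": 95.7,
    "FP16/BF16": 383.0,
}

scale = MI250_CUS / MI250X_CUS
reduction_pct = (1 - scale) * 100

print(f"MI250 stream processors: {MI250_CUS * LANES_PER_CU}")
print(f"Reduction vs MI250X: {reduction_pct:.2f}%")
for name, tflops in MI250X_PEAK_TFLOPS.items():
    print(f"{name}: {tflops * scale:.1f} TFLOPS (estimated)")
```

The stream processor count comes out to exactly 13,312, and the reduction to about 5.45%, consistent with the "about 5.5%" stated above.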