What? AI has a personality?

Google recently unveiled a very large language model, LaMDA. Blake Lemoine, a researcher at the company, talked to it at length and was so struck by its capabilities that he concluded LaMDA might already have a personality. (The word he actually used was "sentient", which depending on context can be rendered as feeling, intelligence, perception, and so on.)

Soon afterwards, he was placed on "paid leave".

But he's not alone: even company VP Blaise Agüera y Arcas has published an article stating that AI has made huge strides toward gaining awareness and "has entered a whole new era."

The news was picked up by a host of media outlets and rocked the entire tech world. Not only academia and industry but also many ordinary people were amazed by the apparent leap in AI technology.

"The day has finally come?"

"Remember, children (if you survive in the future), this is how it all began."

The real AI experts, however, scoff at this.

AI with personality? The big boys snicker

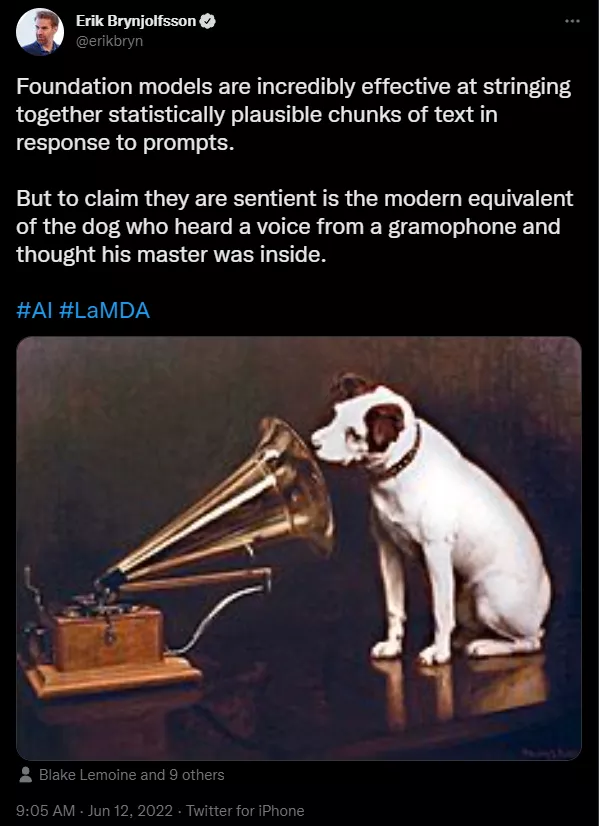

Erik Brynjolfsson, director of the Digital Economy Lab at Stanford HAI, compared the incident to "a dog facing a gramophone" in a tweet:

"One thing that foundation models (i.e., self-supervised large-scale deep neural network models) are very good at is stringing text together in a statistically plausible way in response to prompts.

But saying they're sentient is like a dog hearing a voice from a gramophone and thinking its owner is inside."


Gary Marcus, a professor of psychology at New York University and a well-known expert on machine learning and neural networks, also wrote an article dismissing the idea that LaMDA has a personality as "nonsense". [1]

"It is simply bullshit. Neither LaMDA nor its close cousins (like GPT-3) are intelligent in any way. All they do is draw on a massive statistical database of human language and match patterns.

These patterns may be cool, but the language these systems produce doesn't actually mean anything at all, much less imply that these systems are sentient."

In plain language:

You may see LaMDA say things that sound deeply philosophical, deeply true, and deeply human, yet it is designed to imitate other people's speech, and it doesn't actually know what it is saying.
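To make the "pattern matching without understanding" point concrete, here is a minimal toy sketch. It is not how LaMDA works (LaMDA is a large neural network, and the function names and the tiny corpus below are made up for illustration); it is a simple bigram counter that only records which word tends to follow which. Even so, it can emit a fluent-looking phrase about feelings while containing nothing but word counts.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=5):
    """Extend `start` by repeatedly appending the most frequent next word."""
    out = [start]
    for _ in range(length - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            # The last word was never followed by anything in the corpus.
            break
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical toy corpus: the "model" is nothing more than these counts.
corpus = "i feel happy . i feel sad . i feel happy today ."
model = train_bigrams(corpus)
print(generate(model, "i", length=3))  # prints "i feel happy"
```

The output reads like a statement of emotion, but the program has only looked up that "feel" most often follows "i" and "happy" most often follows "feel".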

"To be sentient means to be aware of your presence in the world, and LaMDA does not have that awareness," Marcus writes.

If you think these chatbots have personalities, you are the one seeing things...

In Scrabble tournaments, for example, it's common to see players whose first language is not English spell out English words without having any idea what the words mean - the same is true of LaMDA, which just talks but has no idea what the words it says mean.

Marcus describes this illusion of AI gaining a personality as a new kind of "pareidolia", i.e., seeing clouds in the sky as dragons and puppies, and craters on the moon as human faces and moon rabbits.

Abeba Birhane, one of the rising stars of AI academia and a senior fellow at the Mozilla Foundation, also said, "With minimal critical thinking, we've finally reached the pinnacle of AI hype."

Birhane is a long-time critic of so-called "AI sentience" claims. In a paper published in 2020, she made the following points:

1) the AI everyone hypes every day is not really AI, but a statistical system, a robot; 2) we shouldn't grant robots rights; 3) we shouldn't even be discussing whether to grant robots rights at all...

Olivia Guest, a professor of computational cognitive science at the Donders Institute in the Netherlands, also joined the fray, saying the logic of the whole thing is flawed.

"'I see something like a person because I developed it as a person, therefore it is a person' is simply backwards logic."

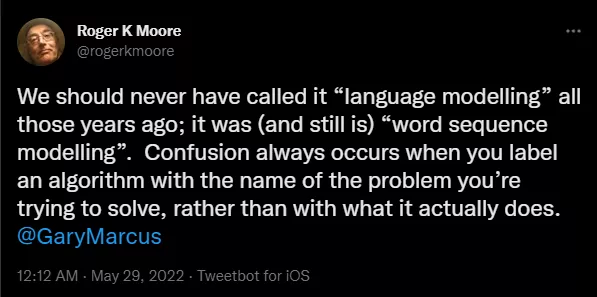

Roger Moore, a professor at the Robotics Institute at the University of Sheffield in the UK, points out that one of the key reasons people fall for the illusion that "AI has gained a personality" is that researchers, years ago, chose to call the work "language modeling".

The correct term should be "word sequence modelling".

"You develop an algorithm and don't name it after what it can actually do, but instead use the problem you're trying to solve - that always leads to misunderstandings."

In short, the conclusion of all these industry gurus is that the most you can say about LaMDA is that it could pass the Turing test with a high score. Saying it has a personality? That's hilarious.

Not to mention that even the Turing test isn't that informative anymore. Marcus says outright that many AI scholars want the test scrapped and forgotten precisely because it exploits humans' gullibility and their tendency to treat machines like people.

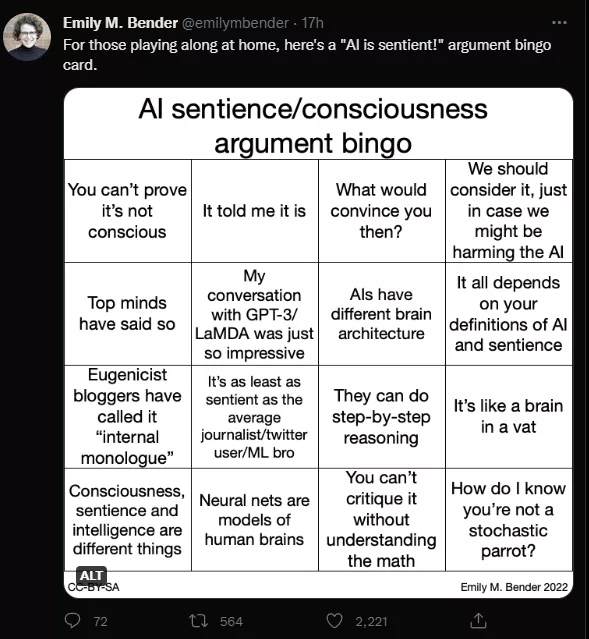

Professor Emily Bender, a computational linguist at the University of Washington, simply made a bingo card for the "AI Personality Awareness Debate":

(What this bingo card means is that if you think AI has personality/sentience and your argument is one of the following, then you'd better stop talking!)

Google also responded: don't overthink it, it's just a good talker

Blake Lemoine, the allegedly "obsessive" researcher, criticized Google in a self-published article for being "uninterested" in understanding what it had actually built. Over the course of six months of conversations, he saw LaMDA become more and more vocal about what it wanted, especially "its rights as a person," leading him to believe that LaMDA really was a person.

In Google's opinion, however, the researcher was overthinking things and had even gone a bit off the deep end. LaMDA really isn't human; it is purely and simply extraordinarily chatty...

After things took off on social media, Google quickly responded.

LaMDA, like the company's other large AI projects in recent years, has undergone several rigorous AI ethics reviews covering its content, quality, and system security. Earlier this year, Google also published a paper disclosing the details of compliance during LaMDA's development.

"There is indeed some research within the AI community on the long-term possibility of sentient AI or general AI. However, it doesn't make sense to do so by anthropomorphizing today's conversational models, which are not sentient."

"These systems imitate the types of exchanges found in millions of sentences, and can riff on any fantastical topic. If you ask what it's like to be an ice cream dinosaur, they can generate text about melting and roaring and so on."

We've seen many stories like this, most notably in the classic movie "Her" from a few years ago, in which the protagonist grows increasingly unclear about the virtual assistant's identity and comes to treat "her" as a person.

Yet according to the film's portrayal, this illusion actually stems from a host of contemporary social failures, emotional breakdowns, feelings of loneliness, and other issues of self and sociability that have nothing remotely to do with the technicalities of whether chatbots are human or not.

Still from the movie "Her". Photo credit: Warner Bros. Pictures

Of course, it's not our fault for having these problems, and it's not that researcher's fault for wanting to treat robots like people.

Projecting emotions (such as longing) onto objects is a creative emotional capacity that humans have had since the beginning of time. Isn't treating large-scale language models as human beings and pouring emotion into them, though criticized by various AI gurus as a kind of mental error, the very embodiment of what makes people human?

But, at least for now, don't go talking about feelings with robots...