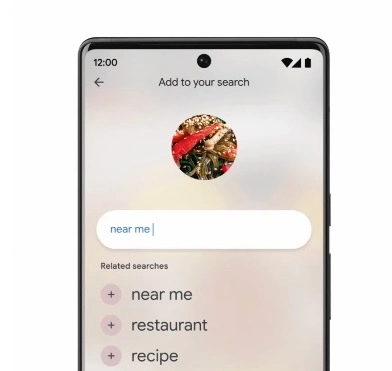

In April this year, Google launched "multisearch," a new way to search the web using text and images at the same time. Today, at its I/O developer conference, the company announced an expansion of this feature called "multisearch near me." The addition is due later in 2022. It will let Google App users combine a picture or a screenshot with the text "near me" to be directed to local retailers or restaurants that carry the clothing, household goods or food they are searching for.

Google also previewed an upcoming multisearch feature that appears to be designed with AR glasses in mind. It can visually search across multiple objects in a scene, based on what the user is currently "seeing" through the smartphone camera's viewfinder.

With the new "near me" multisearch query, users will be able to find local options related to their current combination of visual and text-based search. For example, suppose you are working on a DIY project and come across a part that needs replacing. You could photograph the part with your phone's camera to identify it, then use that information to find a local hardware store that has it in stock. Google explained that this works the same way multisearch already does; it simply adds a local component.

Originally, the idea behind multisearch was to let users ask questions about an object in front of them and refine the results by color, brand or other visual attributes. Today, the feature works best for shopping searches. It lets users narrow down product searches in ways that can be difficult with standard text-based web search. For example, a user can take a picture of a pair of sneakers and then add text asking to see them in blue, so that only shoes in that color are shown. They can then choose to visit the retailer's website and buy them right away. The "near me" option narrows the results further, pointing users to local retailers that carry the product.

The feature works similarly for finding local restaurants. Here, users can search using a photo they came across on a food blog or elsewhere on the internet. This tells them what the dish is and which local restaurants might offer it on their menu for dine-in, pickup or delivery. In this case, Google Search combines the image with the intent to find nearby restaurants. It then scans millions of images, reviews and community contributions to Google Maps to find local restaurants.

Google said the new "near me" feature will be available globally in English and will expand to more languages over time.

A more interesting addition to multisearch is the ability to search within a scene. Google said that in the future, users will be able to pan their camera around to learn about multiple objects in the wider scene. The feature could even be used to scan the shelves of a bookstore and show users which books might be most useful to them.

"To make this possible, we not only brought together computer vision and natural language understanding, but also combined them with web and on-device technologies," said Nick Bell, senior director of Google Search. "So the possibilities and capabilities of this are going to be huge and significant," he said.

The company entered the AR market early with the release of Google Glass. It did not confirm that any new AR glasses-type device is in development, but hinted at the possibility. According to the company, the capabilities of today's AI systems unlock many opportunities, both in what is possible now and in what could become possible over the next few years. Alongside voice, desktop and mobile search, the company believes visual search will also become an important part of the future.

"There are now 8 billion visual searches with Lens on Google every month, three times as many as a year ago. What we are definitely seeing from people is that the appetite and the desire to search visually is there," Bell said. "What we need to do now is dig into the use cases and identify where this is most useful. As we think about the future of search, I think visual search is definitely a key part of it," he said.

The company is reportedly working on a secret project, code-named Project Iris, to build a new AR headset expected to launch in 2024. It is easy to imagine the scene-scanning capability running on such a device. Any kind of image-plus-text (or voice) search feature could likewise be used on an AR headset. Imagine, for example, looking again at the pair of sneakers you liked and asking the device to navigate you to the nearest store where you could buy them.

Prabhakar Raghavan, senior vice president of Google Search, said at the Google I/O conference: "Looking ahead, this technology could go beyond everyday needs to help address societal challenges, like supporting conservationists in identifying plant species that need protection, or helping disaster relief workers quickly sort through donations in times of need."

Unfortunately, Google did not provide a timeline for when it expects to put the scene-scanning feature into users' hands, as it is still "under development."