AGI by 2029? Gary Marcus: no way, and I'll bet $100,000 on it. "If someone says (deep learning) has hit a wall, they just need to make a list of things that deep learning can't do. Five years later, we can show that deep learning has done them."

On June 1, the reclusive Geoffrey Hinton appeared as a guest on the podcast of Professor Pieter Abbeel of UC Berkeley. The two talked for 90 minutes, covering everything from masked autoencoders and AlexNet to spiking neural networks.

On the show, Hinton explicitly challenged the idea that "deep learning has hit a wall".

The phrase "deep learning has hit a wall" comes from an article published in March by Gary Marcus, a well-known AI scholar. More precisely, he believes that "pure end-to-end deep learning" is close to exhausting its potential, and that the AI field as a whole must find a new way forward.

Where is that way forward? According to Gary Marcus, symbol processing has a bright future. However, this view has never been taken seriously by the community. Hinton even said previously: "Any investment in symbol processing methods is a huge mistake."

Hinton's public "rebuttal" in the podcast obviously attracted Gary Marcus' attention.

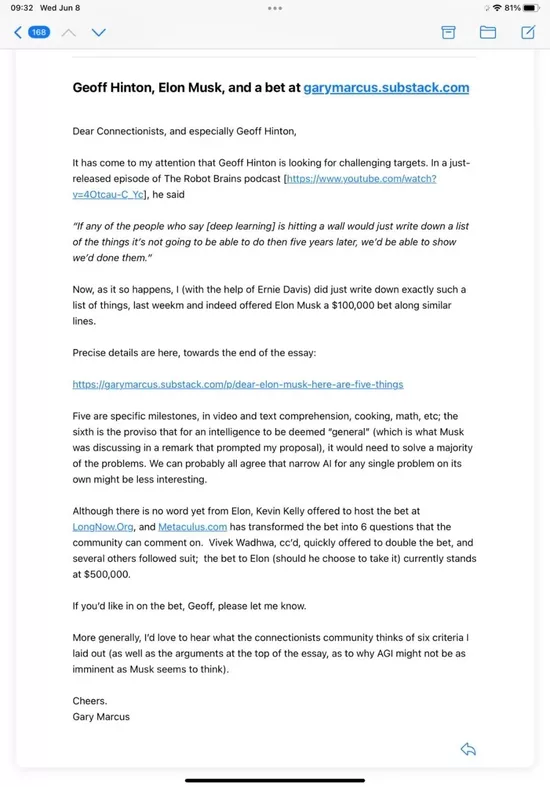

Just over ten hours ago, Gary Marcus posted an open letter to Geoffrey Hinton on Twitter:

The letter said: "I noticed that Geoffrey Hinton is looking for some challenging goals. With the help of Ernie Davis, I have indeed written down such a list. Last week, I also offered Musk a $100,000 bet."

Where does Musk come into this? The story starts with a Twitter post at the end of May.

A $100,000 bet with Musk

AGI has long been understood as the kind of AI depicted in films such as 2001: A Space Odyssey (HAL) and Iron Man (Jarvis). Unlike today's AI, which is trained for specific tasks, AGI would be more like a human brain, able to learn how to complete new tasks.

Most experts believe that AGI is decades away, and some believe it will never be achieved. In a survey of experts in the field, the estimate was a 50% chance of achieving AGI by 2099.

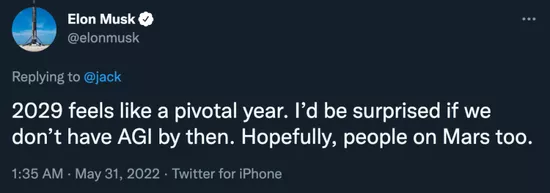

Musk, by contrast, is more optimistic, and even stated publicly on Twitter: "2029 is a key year. I would be surprised if we have not achieved AGI by then. I hope the same is true for people on Mars."

Gary Marcus, who disagreed, quickly asked: "How much are you willing to bet?"

Although Musk did not reply, Gary Marcus went on to say that he was willing to place the wager on Long Bets, with a stake of US $100,000.

In Gary Marcus's view, Musk's predictions are not reliable: "For example, you said in 2015 that fully autonomous driving was two years away. Since then, you have said the same thing almost every year, but fully autonomous driving still has not been achieved."

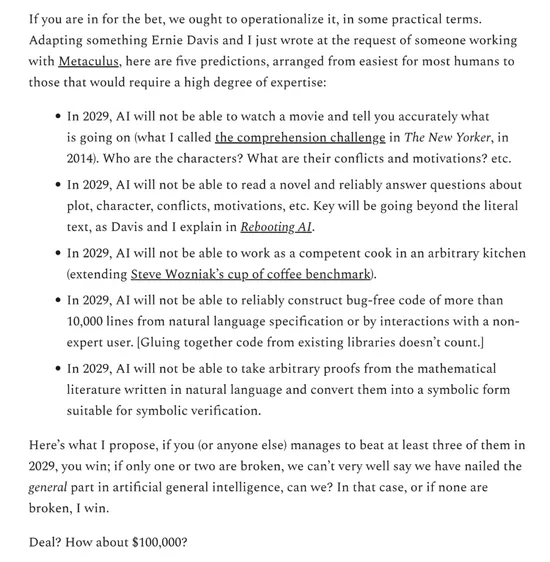

On his blog, he also set out five criteria for testing whether AGI has been achieved, which form the terms of the bet:

In 2029, AI will not be able to watch a movie and tell you accurately what is happening (who the characters are, what their conflicts and motives are, etc.);

In 2029, AI will not be able to read a novel and reliably answer questions about plot, characters, conflicts, motives, etc.;

In 2029, AI will not be able to work as a competent cook in an arbitrary kitchen;

In 2029, AI will not be able to reliably write more than 10,000 lines of bug-free code from a natural language specification or through interaction with a non-expert user (gluing together code from existing libraries does not count);

In 2029, AI will not be able to take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

"This is my proposal. If you (or anyone else) manage to achieve at least three of these by 2029, you win. Deal? How about $100,000?"

As more people joined in, the size of the bet rose to $500,000. So far, however, Musk has not replied.

Gary Marcus: AGI is not as close as you think

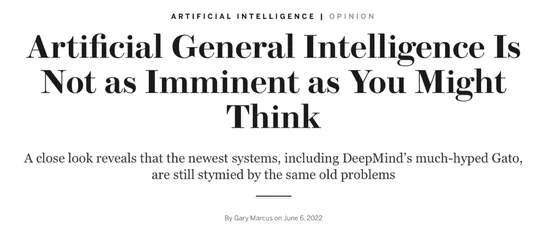

On June 6, Gary Marcus published an article in Scientific American, reiterating his view that AGI is not close at hand.

To ordinary people, the field of artificial intelligence seems to be making great progress. According to media reports, OpenAI's DALL-E 2 can seemingly turn any text into an image, GPT-3 knows everything, and the Gato system released by DeepMind in May performs well on every task. A senior DeepMind executive even boasted that the company had set out to achieve artificial general intelligence (AGI), that is, AI with the same level of intelligence as human beings.

Don't be fooled. Machines may one day be as smart as people, or even smarter, but that day is still far off. There is still a lot of work to be done to create machines that truly understand and reason about the real world. What we really need now is less posturing and more basic research.

To be sure, artificial intelligence has made progress in some respects (synthetic images look more and more realistic, and speech recognition can work in noisy environments), but we are still a long way from general-purpose, human-level AI. For example, artificial intelligence cannot understand the true meaning of articles and videos, nor can it deal with unexpected obstacles and interruptions. We still face the challenge that AI has faced for many years: making AI reliable.

Take Gato as an example. Given the task of captioning an image of a pitcher throwing a baseball, the system returned three different answers: "a baseball player throws a baseball on the baseball field", "a man throws a baseball to a pitcher on the baseball field" and "a baseball player is hitting the ball, and a catcher is in a baseball game". The first answer is correct, while the other two appear to include other players who are not visible in the image. This suggests that Gato does not know what is actually in the image, only what is typical of roughly similar images. Any baseball fan can see that this is a pitcher who has just thrown the ball. Although we would expect a catcher and a batter to be nearby, they clearly do not appear in the image.

Similarly, DALL-E 2 confuses two spatial relationships: "a red cube on top of a blue cube" and "a blue cube on top of a red cube". Likewise, the Imagen model released by Google in May cannot distinguish between "an astronaut riding a horse" and "a horse riding an astronaut".

When a system like DALL-E gets things wrong, you may find it funny, but when some other AI systems go wrong, the consequences can be very serious. For example, a self-driving Tesla recently drove straight toward a worker holding a stop sign in the middle of the road, and slowed down only after the human driver intervened. The self-driving system can recognize humans and stop signs separately, but it failed to slow down when it encountered an unusual combination of the two.

So, unfortunately, AI systems are still unreliable and struggle to adapt quickly to new environments.

Gato performed well on all the tasks reported by DeepMind, but rarely as well as other contemporary systems. GPT-3 often writes fluent prose, but it still struggles with basic arithmetic and has too little grasp of reality. It readily produces absurd sentences such as "some experts believe that eating socks helps the brain change its state".

The underlying problem is that the largest research teams in artificial intelligence are no longer found in academic institutions but in large technology companies. Unlike universities, companies have no incentive to play fair. Their new papers are announced through press releases rather than submitted to academic scrutiny, steering media coverage and sidestepping peer review. The information we get is only what the companies themselves want us to know.

The software industry has a special word for this business strategy: "demoware", meaning software designed to look good in a demo but not necessarily to work well in the real world.

AI products marketed in this way often either never ship or fall apart in real-world use.

Deep learning has improved the ability of machines to recognize patterns in data, but it has three defects: the patterns it learns are superficial rather than conceptual; its results are difficult to interpret; and those results are difficult to generalize. As Les Valiant, a Harvard computer scientist, pointed out, "the core challenge in the future is to unify the forms of AI learning and reasoning."

At present, companies are trying to beat benchmarks rather than come up with new ideas. They would rather eke out small improvements with existing technology than stop to think about more fundamental problems.

We need more people asking basic questions such as "how do we build a system that can learn and reason at the same time", rather than chasing flashy product demos.

This debate about AGI is far from over, and other researchers have joined in. Researcher Scott Alexander pointed out in his blog that Gary Marcus is a legend. What he has written over the past few years has been more or less inaccurate, but it still has its value.

For example, Gary Marcus once criticized certain problems in GPT-2. Eight months later, when GPT-3 arrived, those problems had been solved. But Gary Marcus showed GPT-3 no mercy either, and even wrote an article titled "OpenAI's language generator doesn't know what it is talking about."

In essence, one view holds up for now: "Gary Marcus makes fun of large language models, but these models will keep getting better. If this trend continues, AGI will be realized soon."